When I went to university me and some friends spent time on practising algorithm implementations for the national programming contests that were held each year. The concept was (and still is) to solve about ten problems in a couple of hours time using a programming language of choice. The source code is submitted to an automatic judge that compiles the code and feeds the executable with test data and verifies that the program delivers the correct output expected for the input.

After being out in real work I have noticed that I have had good use of these earlier exercises. One of the most important lessons is to know by heart the complexity of different algorithms and when to use a simple brute force method and when a more advanced algorithm must be implemented.

I've recently done a couple of problems per night just for the fun of it. It feels exciting to program on a hard problem using just 20 lines of code instead of coding 1000 lines of boiler plate which I guess many of us do at our every day works.

Without trying to indicate that I'm an expert on complex algorithms, here are some things I think are valuable when getting serious about algorithm design and solving harder programming challenges.

If you didn't take a course on complexity theory or don't remember the basics I think this is a must to have in the toolbox for every programmer. This implies knowing which problems are inherently hard. By hard we dont mean hard to implement a solution for, but hard in the sense that the resources (CPU time or memory) scales bad with larger and larger sets of input for the problem.

The standard way of measuring the complexity of an algorithm is to resolve the algorithms Ordo or Big-O function. The formal definition is that this Big-O function O() satifies

Another problem, consider finding a value in a sorted tree (or in a sorted array for that matter via binary search). In this algorithm, each inspection of a node in the tree is proportional to one operation. Each inspection will cut the size of the tree in half so only one of the halfs will be needed to be examined in the next operation. This means that the number of operations needed to find the value will be less than the number of values in the tree. So we have an algorithm that is faster than the linear g(n) = n. In fact this class of problems are solved in logarithmic time proportional to the size of the input, or O(log(n)).

So going back to programming challenges, by knowing a lot of algorithms you might figure out which one will suit the particular problem. First of all, always find the bounds of the input data for your problem. What are the most extreme inputs we might expect to handle? Then, assuming the worst case scenario, what is the most easily implemented algorithm we can get away with within the requirements on time and space utilization?

For example, to sort an array of at most 10 000 000 values, bubble sort would require something proportional to n×n = 100 000 000 000 000 operations. Even with multi cores, this will not be fast. So by knowing that for example the heap sort algorithm is O(n log n) we know that this algorithm will sort the array proportional to 10 000 000 × 7 = 70 000 000. Definitively something that can be accomplished within a fraction of a second.

But assume that we know that the values in the array are all in the range of 1 to 1000 000. This extra clue can give us the hint to consider bucket sort which has larger memory requirements than heap sort but can perform the sort in linear time proportional to the input size, O(n).

The above example of sorting shows that it is essential to know a lot of algorithms. By "knowing", you don't need to know the implementation of them. You can always search the web for actual implementations, pseudo code or even find them implemented in some library. The important part is to know that they exist and for what particular problems they are useful and what requirements they put on input data, time and memory usage so you can make a decision on whether they are applicable for your problem.

There are specific algorithms targeting very specific problems such as Dijkstra's algorithm that solves the single-source shortest path problem for a graph with non-negative edge path costs. This is a rather common problem within the class of graph problems so it can be handy to know by heart, but maybe more interesting is to analyze what makes Dijkstra's algorithm successful. It is a typical example of Dynamic Programming (DP) which is a general approach of solving many hard problems by breaking them into smaller and simpler subproblems and solving the larger problem by combining solutions of smaller problems. Other general ideas that can be useful in many different contexts is divide and conquer algorithms, greedy algorithms and backtracking.

Sorting

When considering which algorithm to choose, think about what requirements you have and the expected input. For example, some algorithms are stable, where stable means that if two objects are considered equal (depending on the comparison function used when sorting), the relative order of them will be preserved. For example the

quick sort algorithm is much more complicated to implement if it must behave in a stable manner. Furthermore, some algorithms have very nice average Big-O requirements but may deteriorate to

O(n²) if the values to sort already are sorted. Ordo funtioncs are always given for worst case scenarios.

Merge sort is a popular

O(n log n) sorting algorithm implemented in Perls sorting library.

Timsort, O(n log n) is based on merge sort but can run in linear time if the input is already sorted. Timsort is deployed in JDK 7 and Python libraries.

Heap sort has even less memory requirements than merge sort and runs in the same time asymptotically.

If you have the possibility to sort in parallel there are implementations of for example merge sort than can give even better performance.

UVA problems that could be relevant to practise on:

299 - Train Swapping,

10252 - Common Permutation.

Graph problems

The most important thing to consider when attacking graph problems is how to traverse the graph. Depth First Search (DFS) is easily implemented with recursion but can have problems with non termination if the graph's size is similar to the internet and if recursion is made in languages like Java or C, the stack will overflow in a certain amount of iterations (stackframes). Other languages like Scala can avoid new stack frames if the code is written tail recursively which internally is unwrapped as a while loop. In older languages, you must know how to convert your recursive solution to an imperative loop solution when search depths become substantial.

Adaptations to DFS can be for example

iterative deepening, popular in implementations of

game trees in for example Chess engines. This means that the search is performed to deeper and deeper depths in iterations making it possible to abort if for example a certain time constraint is broken. Useful when the branching factor of the searched tree is large.

Alpha-beta pruning is a clever way to reduce the search space by limiting the branching factors in DFS in game trees.

Typical uses of DFS are finding a solution out of a maze, planarity testing, finding connected components of a graph, deciding biconnectivity of a graph and topological sorting of a directed graph.

Breadth First Search (BFS) is based on a queue data structure and is easily implemented without recursion. Some typical uses of BFS is to find all nodes within a connected component, finding the shortest path between two nodes of an unweighted graph and to find the maximum flow in a flow network.

Some practise problems on general graph and tree traversals are

167 - The Sultan's Successors,

115 - Climbing Trees,

297 - Quadtrees.

Combining DFS or BFS with ideas like greedy selection and DP and you have some of the most famous algorithms below.

Dijsktra's algorithm - Find the shortest path from a specific node to all other nodes in a graph with non-negative edge costs. The original algorithm from 1959 runs in

O(|V|²) where |V| is the number of vertices in the graph. An improvement uses a Fibonacci heap as priority queue which runs in

O(|E| + |V| log |V|) where |E| is the number of edges. However, implementing a Fibonacci heap is work on its own so only use it if necessary.

Bellman–Ford algorithm - Similar to Dijkstra's algorithm, Bellman-Ford finds shortests paths in a graph but can also handle negative edge weights (as long as there are no negative cycles since that means there is no shortest path).

Prim's and Kruskal's algorithms can be used to find a minimum spanning tree of a graph. That means, a tree that includes all vertices that are connected within the graph. These algorithms have similar time complexity as Dijkstra since the

O(|V|²) can be improved to

O(|E| + |V| log |V|) with a Fibonacci heap. Prim's algorithm is a good example of a greedy algorithm.

Floyd-Warshall's algorithm finds all shortest paths costs between all nodes in a graph with positive or negative edge weights (no negative cycles though). The simplest implementation can be extended to also keep a backtracking table so it is possible to not only find the path costs but also reconstruct the actual shortest path between all nodes. FW is a typical example of Dynamic Programming. FW runs in

O(|V|³).

A good practice problem is

104 - Arbitrage.

Sequence and string problems

There are a lot of problems involving finding longest sequences or subsequences in different lists. These lists can be arrays of numbers or characters written as strings. Often a combination of sorting and some specific algorithm is best medicine.

The Longest Increasing Subsequence algorithm (LIS) will in the list 0, 8, 4, 12, 2, 10, 6, 14, 1, 9, 5, 13, 3, 11, 7, 15 figure out that the longest sequence in ascending order must be 0, 2, 6, 9, 13, 15. Note there can of course be other equally long sequences. LIS runs in

O(n log n) since its implementation is based on binary search.

The

Longest common substring problem means to find out that "XYZ" is the longest common substring in the three strings "XYXYZ", "YXYZX" and "XYZYX". There are two types of algorithms for this, one builds a suffix tree and one is based on dynamic programming.

The problem of finding the minimum number of edit operations needed to turn one string into another is addressed by the

String-to-string correction problem. A side effect of the algorithm for this is that by storing the deltas you can for example store many very large quite similar strings by only keeping one copy of the first string and then the small deltas of the edits needed to turn it into the other strings. An application would be to store for example DNA sequences or similar in this way. The

Levenshtein distance is the number of changes needed to turn a string into another and can solve the String-to-string problem via a DP approach.

Practise problems:

111 - History Grading.

Number theory

Math problems can sometimes feel academical but there are important real life applications considering for example prime numbers and cryptography or how many ways something can be done using combinatorics.

Problems concerning prime numbers are common. To verify whether a certain number is prime (primality testing), different algorithms can be used. For moderate bounds on input, simply test for divisibility up to √n. Once

n is large you might need faster ways to test for primality. One of the fastest algorithms which runs in polynomial time is the

AKS test which can be implemented in

O(log(n)6).

A tougher problem is to factor a number into its prime factors. The most difficult numbers are

semiprimes which are the product of two primes. No polynomial algorithm is known to do this which is the base of RSA cryptography. If you know more about the properties of the composite number there can be shortcuts to factor the number. Numbers on the form

re±s can be efficiently factored using the

Special Number Field Sieve algorithm if

r and

s are relatively small. From this the

General Number Field Sieve is derived which is the most efficient classical algorithm known for factoring integers > 100 digits.

There are problems where you need to find the

greatest common divisor (

gcd) of two numbers. This number can be found using the Euclidean algorithm which can be implemented as below where

% is Javas syntax for modulus.

int gcd(int a, int b) {

if (b != 0) {

return gcd(b, a % b);

}

return Math.abs(a);

}

Once we have gcd, we can easily calculate the

least common multiple (lcm) of two numbers as

Another problem that you will find in some of the UVA challenges includes raising large powers. If you are to calculate

ab and

b is large the naive solution would include

b number of multiplications of

a. A much faster way is to use repeated squaring which means that you square the results to make the number of multiplications logarithmic in relation to

b. Some pseudo code for this would be

/**

* Calculates n to the p power, where

* p is a positive number.

*/

LargeInt power(LargeInt n, LargeInt p) {

if (p == 0) return 1

if (p == 1) return n

if (isOdd(p)) {

return n * power( n * n, (p - 1) / 2 )

} else {

return power( n * n, p / 2 )

}

}

There are some problems on calculating Fibonacci sequences. If you wind up with

Time Limit Exceeded (TLE) from the judge on these and you think you have made a really fast algorithm avoiding recursion and no double calculations, remember that factorials can be expressed in a closed form as well where

F(n) is the n'th number in the sequence.

Combinatorics

Variations on combinatorics is a common theme. Assume you have a box of

n items, in how many unique ways can you pick

k items?

0 <= k <= n.

If you remember your discreet mathematics this is solved using binomial coefficients (Pascal's triangle). The academic version is often described as a divisions of factorials.

This is not a practical implementations however. The formula can be rewritten as a product of terms better suited for an engineering implemention.

Example problems:

10219 - Find the ways !,

11401 - Triangle Counting

Probability theory

Knowing some basic probability theory is not only good for programming challenges. Besides the basic definitions and axioms (Kolgomorov) there are lots of interesting topics such as stochastic processes and variables, probability distributions, Bayes theorem and more.

Practise problem:

10491 - Cows and Cars

Dynamic Programming

Dynamic programming (DP) is a methodology for attacking complex problems by splitting the problem into simpler subproblems which solutions can be reused to solve the original problem. The problem must have the property of

overlapping subproblems. This means that the subproblems are occuring several times in the problem and can be reused in the DP approach. A simple example of this would be a function

f(n) to calculate Fibonacci numbers. Since

f(n) = f(n-1) + f(n-2), a DP approach would store each

f(x) to reuse previously calculated valued. The stored values are called "memo-ized".

In general, DP is a technique to speed up solutions of problems where you make brute force searches and cache intermediate results.

Let's examine a more complex problem where DP actually makes great difference.

"Suppose you have x dollars and want to figure out in how many unique ways you get change for this in the coins 1c, 5c, 10c, 25c and 50c."

The brute force approach would be to nest five for loops and iterate over the five different coins and count the number of valid combinations. A very simple algorithm but it would not scale well with larger amounts of dollars or if we had more types of coins increasing the number of nested for loops.

Let's start thinking from a dynamic programming perspective. Can we make the problem simpler? Let's assume we have solved the same problem (in some at this time unknown way) for 4 currencies. So we know how many combinations you could get x dollars in 1c, 5c, 10c and 25c. Could this help us? Yes, then we could deduce that the total number of combinations would be the sum of

0, 1, 2 ... n number of 50c coins where

n is the maximal number of 50c coins you could get out of x dollars. Each such number of combinations could be calculated by the already known function since it handles the other four coins.

Naturally, we can use the same approach to solve the problem for 4, 3 and 2 coins. The case of 1 coin is so simple that we can implement it without relying on any other assumed function.

Here's a recursive implementation of this idea.

private int combinations(final int[] change, final int maxCoins,

final int amount) {

/*

* Base case 1. If amount = 0, there is one combination -> no change.

*/

if (amount == 0) {

return 1;

}

/*

* Base case 2. If negative amount, no solutions

*/

if (amount < 0) {

return 0;

}

/*

* Base case 3. No change to work with -> no solutions

*/

if (maxCoins <= 0) {

return 0;

}

/*

* Here goes the recursion, sum all combinations using one coin less

* from the list of coins plus the number of combinations if we remove

* one of the largest coins from the total amount.

*/

final int c = combinations(change, maxCoins - 1, amount) + combinations(change, maxCoins, amount - change[maxCoins - 1]);

return c;

}

Call it with the number of available coins and the number of coins in the array to use together with the total amount of dollars (times 100 to get int values for cents).

int combs = combinations([1, 5, 10, 25, 50], 5, 500000);

This will work, but it will not be efficient. For each invocation that does not end in a base case, two recursive calls will be performed and the same subproblem will be recalculated over and over again.

The good thing is that we have divided the problem into specific overlapping subproblems. Now we can add the idea of dynamic programming. Add a cache that stores previous calculations as a base case to make the recursive solution only calculate subproblems that not have been encountered before.

private int combinations(final int[] change, final int maxCoins,

final int amount, final int lookupTable[][]) {

/*

* Base case 1. If amount = 0, there is one combination -> no change.

*/

if (amount == 0) {

return 1;

}

/*

* Base case 2. If negative amount, no solutions

*/

if (amount < 0) {

return 0;

}

/*

* Base case 3. No change to work with -> no solutions

*/

if (maxCoins <= 0) {

return 0;

}

/*

* Base case 4. This is already calculated, so DP finds result in cache

*/

if (lookupTable[maxCoins][amount] != -1) {

return lookupTable[maxCoins][amount];

}

/*

* Here goes the recursion, sum all combinations using one coin less

* from the list of coins plus the number of combinations if we remove

* one of the largest coins from the total amount.

*/

final int c = combinations(change, maxCoins - 1, amount,

lookupTable)

+ combinations(change, maxCoins, amount

- change[maxCoins - 1], lookupTable);

lookupTable[maxCoins][amount] = c;

return c;

}

This will perform efficiently and is it is easy to understand the logic. Unfortunately a problem with recursive solutions in imperative languages like Java is that deep recursions will run out of stack space. So to make this solution even more generic we should rewrite it using loops instead of in the more aestethic functional style.

private int[][] combinations(final int[] change, final int maxCoins,

final int amount) {

final int[][] lookupTable = new int[amount + 1][maxCoins + 1];

for (int i = 0; i <= amount; i++) {

for (int j = 0; j <= maxCoins; j++) {

if (i == 0) {

lookupTable[i][j] = 1;

} else if (j == 0) {

lookupTable[i][j] = 0;

} else if (change[j - 1] > i) {

lookupTable[i][j] = lookupTable[i][j - 1];

} else {

lookupTable[i][j] = lookupTable[i - change[j - 1]][j]

+ lookupTable[i][j - 1];

}

}

}

return lookupTable;

}

This function retrieves the all the memoized combinations and we find the solution to our problem as

final int[] change = { 1, 5, 10, 25, 50 };

final int[][] combs = combinations(change, change.length, maxDollars);

final int answer = combs[amountToChange][5];

In the UVA problem set you will often need to think from a DP perspective to create solutions that are fast enough for the input data and the time constraints.

Some good problems to practise on:

103 - Stacking Boxes,

108 - Maximum Sum

The particular coin change problem described above is available in several versions on UVA. Have a try at problem

147 - Dollars.

Divide and Conquer

Divide and conquer algorithms are based on multi branched recursion. It breaks down a problem into several sub problems and recurse on them. These can thereby be divided into subproblems until they are small enough to be easily solved. Then the solutions are used to generate the answer to the complex problem. Quicksort, merge sort, discrete Fourier transform (FFT), top down parsers used in syntactic analysis are D&C algorithms.

Another example that can be useful to know in the UVA problem challenges is that there is an algorithm called Karatsuba which multiplies two large integers (with thousand or millions of digits) fast. It runs in

O(3nlog23) which is notably faster than the

O(n2) method we learned in school. Karatsuba is a D&C algorithm. Binary search could be called a simple form of D&C.

Suitable practise problems:

10341 - Solve It

Greedy algorithms

A greedy algorithm makes local optimal choices at each stage during the algorithm in hope of producing a global optimum. Many problems will fail to do so with a greedy approach since the complete search space is not visited. But for certain problems greedy selection works well and can therefore create fast solutions. In other cases where the optimal solution is not possible to calculate in an efficient way, a greedy solution might come up with a decent approximation or bounded result.

Examples of greedy algorithms are the construction of a Huffman tree during Huffman coding or Kruskal's algorithm for finding a minimum spanning tree of a graph.

Practise problem:

11389 - The Bus Driver Problem

Backtracking

Backtracking is a technique that finds one or all solutions to a problem by trying different candidates and abandon those branches that breaks the constraints of the problem which means that the current path cannot lead to a valid solution of the problem.

An example where backtracking would be suitable is a solver for a Sudoku puzzle. By doing a depth first search and inserting new numbers in empty positions and for each insertion checking whether the new number breaks any constraints of the rules of Sudoku, the board can be iteratively filled with valid numbers. As soon as a number violates the rules and all numbers have been tried in the current position, the algorithm must backtrack to the previous position and try another number.

Examples where backtracking is suitable are puzzles and combinatorial optimization problems such as the knapsack problem.

A good practise problem is the classic Eight Queens puzzle where you should position eight queens on a chessboard without any queen threatening another. Here's a few modifications on that theme on the UVA site

11085 - Back to the 8-Queens,

167 - The Sultan's Successors.

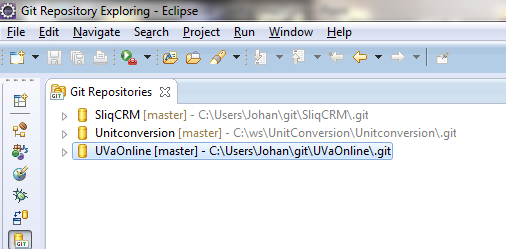

UVA Online Judge

The online judge system at

http://uva.onlinejudge.org/ accepts code in C/C++/C++11/Java. Some hints for beginners might be to start with an easy problem to get to know the judge. Good choices might be problem

10018 - Reverse and Add or

11172 - Relational Operator.

Important, read the input and output constraints carefully. For example, if the input consists of integers and are between 0 and 4,294,967,295 remember that a signed 32 bit integer will overflow so you should use a 64-bit integer or an unsigned int depending on the language you code in.

Output format matters, so be careful with trailing spaces and linefeeds. Sometimes you simply must try with or without an ending newline since the problem description won't tell which one is correct unfortunately.

Create a good template which you can copy when starting on a new problem. If you code in Java you will probably have everything you need in the standard library such as BigIntegers and much more. If you code in C/C++ it can be good to add some routines for handling arbitrary large numbers to your template.

If you get stuck on a problem try to create test cases for all the border cases allowed in the input. Try the worst possible input and also the smallest possible input to see that you get reasonable output.

Many basic problems can be solved with simple ad hoc brute force solutions but the more interesting problems with greater time complexities will perform very badly with naive solutions. The problems are often devised so that brute force solutions will not be able to perform within the time limits. However, once you've created a fancy solution based on DP or some other approach it is not always self evident that the algorithm is correct. In those cases it can still be valuable to run the outputs from your algorithm against a quickly coded brute force solution to verify that they produce the same output.

If you still is stuck and can't figure out why the online judge gives your solution "Wrong Answer" you can check the forums where many helpful programmers gives hints or test data to test your code against.

Good luck!

Edit 2014-05-01:

I got feedback on whether I could recommend any books for those interested to dig deeper. I got two books which I bought ten years ago which I still think should be relevant.

Programming Challenges: The Programming Contest Training Manual by Skiena and Revilla is exactly what it sounds like. A walktrough of different types of programming challenges and techniques for solving them.

Algorithms by Sedgewick and Wayne is more a general CS book and a good read.

.gif)